Artificial Intelligence Risk Management Framework

The Artificial Intelligence Risk Management Framework (AI RMF 1.0) is a framework that helps organizations meet risk management and compliance requirements when using artificial intelligence (AI).

Introduction

The Artificial Intelligence Risk Management Framework (AI RMF 1.0) helps organizations meet risk management and compliance requirements when deploying artificial intelligence (AI). It was developed by the National Institute of Standards and Technology (NIST), a U.S. government agency, and provides a structured method to identify, assess, and manage the risks of implementing AI systems. The framework includes guidelines to help organizations develop, implement, and monitor AI systems, along with tools to help them meet compliance requirements. This article focuses on the part of the framework that covers AI risks and trustworthiness.

AI Risks and Trustworthiness

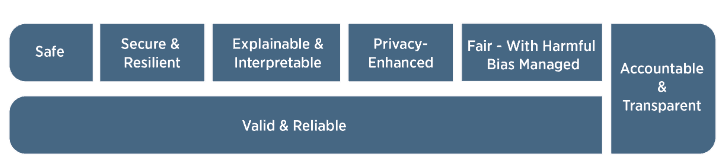

For AI systems to be trusted, they often must meet a variety of criteria that are of value to interested parties. Approaches that improve AI trustworthiness can reduce negative AI risks. Characteristics of trustworthy AI systems include: valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-oriented, and fair. Creating trustworthy AI requires balancing each of these characteristics against the context in which the AI system is used. While all characteristics are socio-technical system attributes, accountability and transparency also relate to the processes and activities within an AI system and its external environment. Neglecting these characteristics can increase the likelihood and magnitude of negative consequences.

In the figure above, valid and reliable is a necessary condition for trustworthiness and is shown as the basis for the other trustworthiness characteristics. Accountable and transparent is shown as a vertical box because it relates to all other characteristics.

Validity and Reliability

Validation is the "confirmation by providing objective evidence that the requirements for a particular intended use or application have been met." Deploying AI systems that are inaccurate, unreliable, or poorly generalized to data and settings beyond their training creates and increases negative AI risks and reduces trustworthiness. Reliability is defined as "the ability of an element to function as required, without failure, for a specified period of time under specified conditions." Reliability is a goal for the overall correctness of an AI system's operation under the conditions of expected use and over a specified period of time, including the entire life of the system.

Safe

AI systems should not "result in a condition that endangers human life, health, property, or the environment under defined conditions." Safe operation of AI systems is enhanced by the following measures:

- Responsible design, development, and deployment practices;

- Clear information for deployers on how to use the system responsibly;

- Responsible decision making by deployers and end users; and

- Explanations and documentation of risks based on empirical evidence of incidents.

Different types of safety risks may require tailored AI risk management approaches based on the context and severity of potential risks. Safety risks that pose a potential risk of serious injury or death require the most urgent prioritization and the most thorough risk management process.
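The prioritization rule above can be illustrated with a small Python sketch. It is not part of the framework: the `Risk` class, the 0 to 1 likelihood scale, the 1 to 5 severity scale, and the example risks are all hypothetical assumptions made for illustration. The only idea taken from the text is that risks involving potential serious injury or death are ranked first, regardless of how unlikely they seem.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """A hypothetical risk register entry (not defined by the AI RMF)."""
    name: str
    likelihood: float  # assumed scale: 0.0 (never) to 1.0 (certain)
    severity: int      # assumed scale: 1 (negligible) to 5 (serious injury or death)


def prioritize(risks):
    """Order risks so that life-threatening ones come first.

    Within each tier, risks are ordered by likelihood * severity,
    which is one common (but not mandated) scoring heuristic.
    """
    return sorted(
        risks,
        key=lambda r: (r.severity >= 5, r.likelihood * r.severity),
        reverse=True,
    )


# Illustrative, made-up entries.
register = [
    Risk("mislabelled product photo", likelihood=0.6, severity=1),
    Risk("incorrect dosage suggestion", likelihood=0.05, severity=5),
    Risk("skewed search ranking", likelihood=0.3, severity=3),
]

ordered_names = [r.name for r in prioritize(register)]
print(ordered_names)
```

The dosage risk is listed first even though its likelihood is the lowest, because its severity places it in the highest tier. An organization would replace the scales and the scoring heuristic with ones suited to its own context.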

Secure and Resilient

AI systems, as well as the ecosystems in which they are deployed, can be said to be resilient if they can withstand unexpected adverse events or unexpected changes in their environment or use, or if they can maintain their functions and structure in the face of internal and external changes and degrade safely and gracefully when necessary.... Common security concerns include adversarial examples, data poisoning, and the exfiltration of models, training data, or other intellectual property through AI system endpoints. AI systems that can maintain confidentiality, integrity, and availability through safeguards that prevent unauthorized access and use can be said to be secure.

Accountable and Transparent

Trustworthy AI depends on accountability, and accountability requires transparency. Transparency reflects the extent to which information about an AI system and its outputs is available to people who interact with such a system, whether they are aware of it or not. Meaningful transparency provides access to appropriate levels of information, tailored to the stage of the AI lifecycle and to the roles or knowledge of the stakeholders or individuals who interact with or use the AI system. By promoting higher levels of understanding, transparency increases confidence in the AI system. The scope of transparency ranges from design decisions and training data to model training, the structure of the model, its intended use cases, and how and when deployment, post-deployment, or end-user decisions were made and by whom. Transparency is often required to provide actionable remediation for AI system outputs that are incorrect or otherwise lead to negative impacts.

Explainability and Interpretability

Explainability refers to an account of the mechanisms underlying the operation of AI systems, while interpretability refers to the meaning of AI system output in the context of its intended functional purposes. Together, explainability and interpretability help those who operate or monitor an AI system, as well as its users, gain deeper insight into the functionality and trustworthiness of the system, including its output. The underlying assumption is that negative risks stem from an inability to adequately understand or contextualize the system output. Explainable and interpretable AI systems provide information that helps end users understand the purposes and potential effects of an AI system. Risks due to a lack of explainability can be managed by describing how AI systems operate in a way that is tailored to individual differences such as the user's role, knowledge, and skill. Explainable systems are easier to debug and monitor and lend themselves to more thorough documentation, testing, and governance.

Privacy

Privacy generally refers to the norms and practices that help protect human autonomy, identity, and dignity. These norms and practices typically address freedom from intrusion, limits on observation, and the agency of individuals to consent to the disclosure or control of facets of their identities (e.g., body, data, reputation). Privacy values such as anonymity, confidentiality, and control should typically inform AI system design, development, and deployment decisions. Privacy-related risks can influence security, bias, and transparency, and involve trade-offs with these other characteristics. As with safety and security, certain technical features of an AI system can promote or reduce privacy. AI systems may also pose new privacy risks by allowing individuals to be identified or by inferring previously private information about them.

Fair

Fairness in AI encompasses issues of equity and justice by addressing problems such as harmful bias and discrimination. Standards of fairness can be complex and difficult to define because perceptions of fairness vary across cultures and may vary depending on the application. Organizations' risk management efforts are enhanced by recognizing and addressing these differences. Systems in which harmful biases are mitigated are not necessarily fair. For example, systems in which predictions are somewhat balanced across demographic groups may still be inaccessible to people with disabilities or to people affected by the digital divide, or may exacerbate existing disparities or systemic biases.
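To make "predictions balanced for different demographic groups" concrete, the following Python sketch computes the share of positive predictions per group and the gap between the highest and lowest share. This is one common balance check, often called demographic parity, and it is used here only as an illustration: the framework does not prescribe this metric, and the function names and sample data are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions, groups):
    """Difference between the highest and lowest group positive rate."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up binary predictions (1 = favourable outcome) for two groups.
predictions = [1, 0, 1, 1, 0, 1, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rate_by_group(predictions, groups)
gap = parity_gap(predictions, groups)
print(rates, gap)  # group "a": 0.75, group "b": 0.5, gap: 0.25
```

A small gap on a metric like this does not by itself make a system fair, which is the point of the paragraph above: accessibility, the digital divide, and existing systemic disparities are not captured by a single balance statistic.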

Conclusion

The Artificial Intelligence Risk Management Framework (AI RMF 1.0) helps organizations meet risk management and compliance requirements when using artificial intelligence (AI). It provides a structured way to identify, assess, and manage risks associated with implementing AI systems. It includes guidelines to help organizations develop, implement, and monitor AI systems, as well as tools to help them meet compliance requirements. For an AI system to be considered trustworthy, it must meet criteria that are of value to interested parties: validity and reliability, safety, security and resilience, accountability and transparency, explainability and interpretability, privacy, and fairness.
